[disclaimer: I do not speak on behalf of my employer. Views are my own, expressed with AI assistance]

As educators, we’ve already started to face an unprecedented challenge: the rise of AI-based academic dishonesty. Over the past year, the issue has moved from being a distant sci-fi hypothetical to a disruptive reality infiltrating our classrooms and undermining our teaching methods.

Many of us have comforted ourselves with the belief that AI detection software would rise to the challenge of identifying artificially generated content, just as plagiarism software arose to address the challenges created by the internet.

Maybe someday. For now, we have no reliable defense against AI-based academic dishonesty. None.

As I learned in this video, OpenAI (the team behind ChatGPT and the current leader in the field) pulled its AI Classifier for being too error-prone. They’re frantically building and testing its replacement. But if you read between the lines about the progress so far, there’s little reason for optimism about the new model either.

“Our classifier is not fully reliable. In our evaluations on a ‘challenge set’ of English texts, our classifier correctly identifies 26% of AI-written text (true positives) as ‘likely AI-written,’ while incorrectly labeling human-written text as AI-written 9% of the time (false positives). Our classifier’s reliability typically improves as the length of the input text increases. Compared to our previously released classifier, this new classifier is significantly more reliable on text from more recent AI systems.” (OpenAI)

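A 26% true positive detection rate? A 9% false positive rate? Let’s be clear: we’re talking about a system that catches only about 1 in 4 AI-written texts, while wrongly flagging roughly 1 in 11 honest, human-written ones. How many of the students it flags are actually innocent depends on how common AI use is in your class, but at these rates the share of false accusations can be alarmingly high.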
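How often a flag lands on an honest student depends on how many essays were AI-written to begin with. Here is a minimal sketch of that arithmetic, using the 26% and 9% rates from the quote above. The base rates in the loop are hypothetical values I picked for illustration, not measured data, and the function name is my own.

```python
def share_of_flags_that_are_false(base_rate, tpr=0.26, fpr=0.09):
    """Fraction of flagged essays that were actually human-written.

    base_rate: assumed fraction of all essays that are AI-written (hypothetical).
    tpr: true positive rate quoted by OpenAI (26%).
    fpr: false positive rate quoted by OpenAI (9%).
    """
    true_flags = base_rate * tpr          # AI-written essays that get flagged
    false_flags = (1 - base_rate) * fpr   # human-written essays that get flagged
    return false_flags / (true_flags + false_flags)


# Hypothetical base rates, chosen only to show how the result moves.
for base_rate in (0.05, 0.10, 0.25, 0.50):
    share = share_of_flags_that_are_false(base_rate)
    print(f"{base_rate:.0%} of essays AI-written: "
          f"{share:.0%} of flags land on honest students")
```

Under these assumptions, if 10% of essays are AI-written, roughly three out of four flags point at a student who did nothing wrong. Even if half the class used AI, about a quarter of the flags would still be false accusations.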

If the top-tier geniuses at OpenAI are unable to definitively recognize and differentiate output from their own model, then how performant do you think the software from companies like Turnitin is?

If you haven’t experimented with the paid version of ChatGPT or the comparably powerful Claude 2 (free open Beta, released in July), then you might still be comforting yourself with the claim Turnitin made in an April blog post. Back then, they announced a 97% detection rate and 1% false positive rate. Cool, that’s pretty solid. But that was against GPT-3 era models. The latest iterations? Not a chance. The might of GPT-4 leaves this software “about as effective as an umbrella in a hurricane” (that vivid analogy was GPT-4’s rephrasing of something that, left to my own devices, I would have wasted a whole paragraph explaining).

True, we as professionals can often notice a mismatch in tone. We can pick up on telltale language patterns that students clumsily leave in. If the paper begins with a copy-pasted “as a large language model, I am unqualified to render an opinion on…” then sure, the jig is up. But relying on the occasional incompetence of a violator is a deeply inadequate approach, provided that we care about fairness and rigor.

With OpenAI’s announcement, even the illusion that out-of-class graded essays remain a viable mechanism for evaluating student learning can no longer be sustained. Period. OpenAI has faced the difficult reality about their software product. As we head into the Fall Semester, we have no choice but to do the same regarding our educational product.

The Obstacle Is The Way

In the face of this imminent challenge, some prefer AI bans and plan a return to in-class writing to ensure students aren’t cheating. These approaches strike me as reactionary, and a missed opportunity. As I see it, the way to deal with skill inflation — everyone getting access to an ability without “earning” it — is to raise the bar on our expectations, not to restrict the learner’s toolset. The best solutions are never about building higher walls; they’re always about shifting the paradigm. Rather than viewing AI as a nemesis in education, I believe and have vocally advocated that we need to embrace it as a tool for transformative learning experiences.

I’m in good company. Some forward-thinking institutions, such as Harvard, have already started requiring students to work with AI. This isn’t just a begrudging acceptance of the inevitable. It’s a strategic move to prepare students for a future where AI is an integral part of professional and daily life.

A transformative shift is not just necessary, it’s overdue. Our traditional, industrial-era education model remains focused on building and rating individual proficiency in technical skills. Those skills are now effortlessly executed by AI, calling our value proposition into question even if we could attain perfect academic integrity. But this isn’t new. For the past several decades of accelerating automation, it has been increasingly evident that the economy doesn’t need obedient cogs in the machine who abide by fixed rules, but rather more and more collaborative graduates with extreme adaptability and creativity.

Integrity. Courage. Cooperativeness. Vision. Empathy. It’s no secret that these “soft skills” are what employers prize in workers. They scream it from the rooftops in survey after survey.

We like to imagine that writing papers free of plagiarism means a student has integrity, and we like to hope that the ability to take a derivative means someone will have the competence to build a sturdy bridge. But these skills only loosely correlate with what we actually want in employees and citizens. Our curriculum and associated methodologies are more of a proxy for what matters in life, rather than the thing itself.

I make this point in the hope that it lets you let go of approaches that no longer serve us. I want to remind you of our overarching goal: producing, at scale, high functioning, highly employable, holistically equipped graduates who are ready to thrive in the 21st century. Isn’t that what we all want? Isn’t that what we’re all here to do? I’m asking you, dear reader, to put aside your AI skepticism and not get bogged down with attachment to the particulars of how we used to try to achieve our goal.

Raising The Bar

Maybe a concrete example of how AI can be a tool to expand our horizons would be useful. A student I often work with knows about my enthusiasm for AI. He walked in as I was writing this section and asked if ChatGPT could help him understand graphing problems. “Sure!” I answered, and asked him to give me a homework problem. The one he chose requires the visual component of graphing, which seems at first glance like it would be a problem for a text-only interface like ChatGPT.

“Use graphing techniques of scaling and translations to sketch the graph of the polynomial function f(x) = -x² - 7.”

But no problem. Because of my familiarity with AI and its capabilities, I know that it’s a fantastic coder, so I simply asked it to write me a Python program that I can copy and paste into a Google Colab notebook in order to see visual displays.

From there, I just have to click run and I get this:

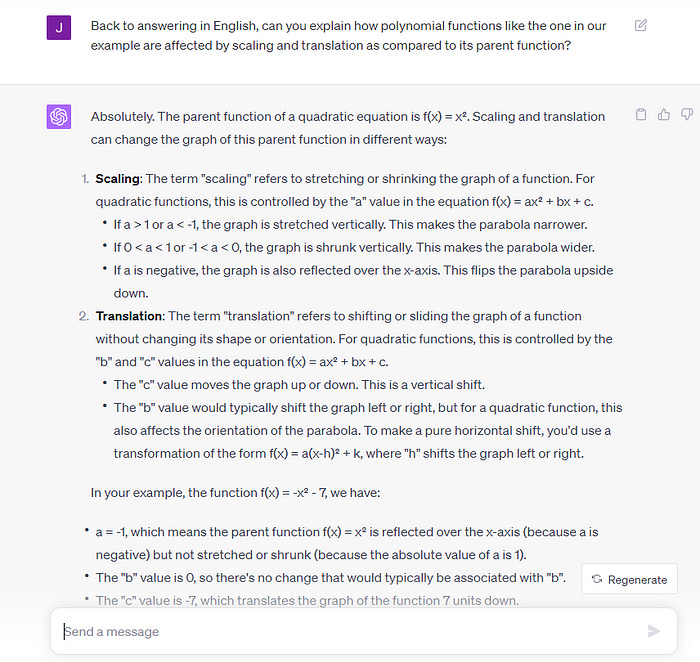
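For readers who want to try this themselves, here is a minimal sketch of the kind of program that draws such a graph. It is my own illustrative reconstruction, not the exact code ChatGPT produced, and it assumes matplotlib is available (it is preinstalled in Google Colab). The function names are hypothetical. It builds the answer the way the homework asks: start from y = x², scale by -1 to flip it, then translate it down 7 units.

```python
def base(x):
    """Parent function y = x^2."""
    return x ** 2


def reflected(x):
    """Scale by -1: flips the parabola across the x-axis, y = -x^2."""
    return -base(x)


def f(x):
    """Translate down 7 units: f(x) = -x^2 - 7."""
    return reflected(x) - 7


def sketch_graph():
    """Plot the parent function and each transformation step."""
    # Imported here so the helper functions above work without matplotlib.
    import matplotlib.pyplot as plt

    xs = [i / 10 for i in range(-50, 51)]  # x from -5 to 5 in steps of 0.1
    fig, ax = plt.subplots()
    ax.plot(xs, [base(x) for x in xs], linestyle="--", label="y = x²")
    ax.plot(xs, [reflected(x) for x in xs], linestyle=":", label="y = -x²")
    ax.plot(xs, [f(x) for x in xs], label="f(x) = -x² - 7")
    ax.axhline(0, color="black", linewidth=0.5)  # x-axis
    ax.axvline(0, color="black", linewidth=0.5)  # y-axis
    ax.set_xlabel("x")
    ax.set_ylabel("y")
    ax.set_title("f(x) = -x² - 7 by scaling and translation")
    ax.legend()
    ax.grid(True)
    plt.show()
    return fig
```

After running the cell, calling `sketch_graph()` displays the three curves together, so the student can see the parabola flip and then slide down until its vertex sits at (0, -7).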

Note that although I happen to understand the Python code because of my programming hobby, it is no longer necessary to speak Python in order to access its incredible power. We’ll come back to that. Anyway, after showing some other visitors in my office that ChatGPT could translate outputs into German, I asked ChatGPT to explain the math in greater detail.

As you can see below, it does just as thorough a job as I could, which was my point to the student (who is very curious!). It’s as if he has me for free at his disposal 24/7 to answer anything he wants to know, except ChatGPT is knowledgeable about an infinitely broader range of topics, and it has an infinitely greater amount of patience. It will keep explaining in different ways over and over again until he’s sure his comprehension of a term or technique is perfect. Observe.

After seeing this, it’s possible you’re feeling a pit of dread in your stomach. And I get it. It’s natural to wonder if AI tools like ChatGPT may replace our jobs and threaten our livelihoods. I respect the validity of this concern, but see our relationship with AI as much more symbiotic than competitive. I elaborate on my views regarding job security more here.

Anyway, back to the concept of raising the bar. As you can see, if we’re teaching students to use AI, we can expect quite a lot more out of them than they’d be able to do all by themselves. Even I would struggle to write the Python code above, because I’m not so familiar with the particular commands and syntax of the matplotlib library and would have to dig around in the docs to find what I need. But now I don’t need to be familiar; ChatGPT is! To help understand the skill of graphing quadratic equations, users can leverage much more complex skills (Python, Jupyter notebooks) without having to possess those skills directly.

The implications of this are profound.

Imagine students in a business class no longer being confined by a lack of technical skills to just theorizing a startup idea and writing an essay about it. They can now reasonably be expected to build, deploy, and test their own prototype apps in collaboration with ChatGPT, despite not knowing how to program!

Never mind writing essays about the facts of the Civil War; why not challenge students to use ChatGPT to code a trivia website or an interactive map?

Rather than trying to preserve the human purity of an essay, you can let students use ChatGPT to their heart’s content and just count that part of the assignment as a draft. Most of the points would then come from their performance on a YouTube presentation of the essay’s contents, bringing many more public speaking opportunities into the curriculum and potentially even helping them to build a portfolio and a channel.

As it stands today, students write essays designed to be read only by you (and you suffer through the reading only because you’re paid to). But if we have students use AI, they can express their ideas in a much more publicly accessible style and actually have a voice in our real-world democracy, participating as digital citizens rather than merely analyst observers. How do I know with such certainty that this can be done? As I mention in every article, I always incorporate ChatGPT into my own workflow and utilize its best suggestions, which is the reason this article was compelling enough to get you to read to the end! It isn’t me alone, and it isn’t ChatGPT alone. It’s a Human-AI team which is greater than the sum of its parts.

The possibilities of such teams are endless. I’m just scratching the surface in this article, and we’re only at the very beginning of what I’m sure will be a long conversation. In upcoming articles I’ll write about all the most innovative AI integration techniques that I see my colleagues using, so if you want to be part of the discussion, feel free to comment below and be sure to subscribe.